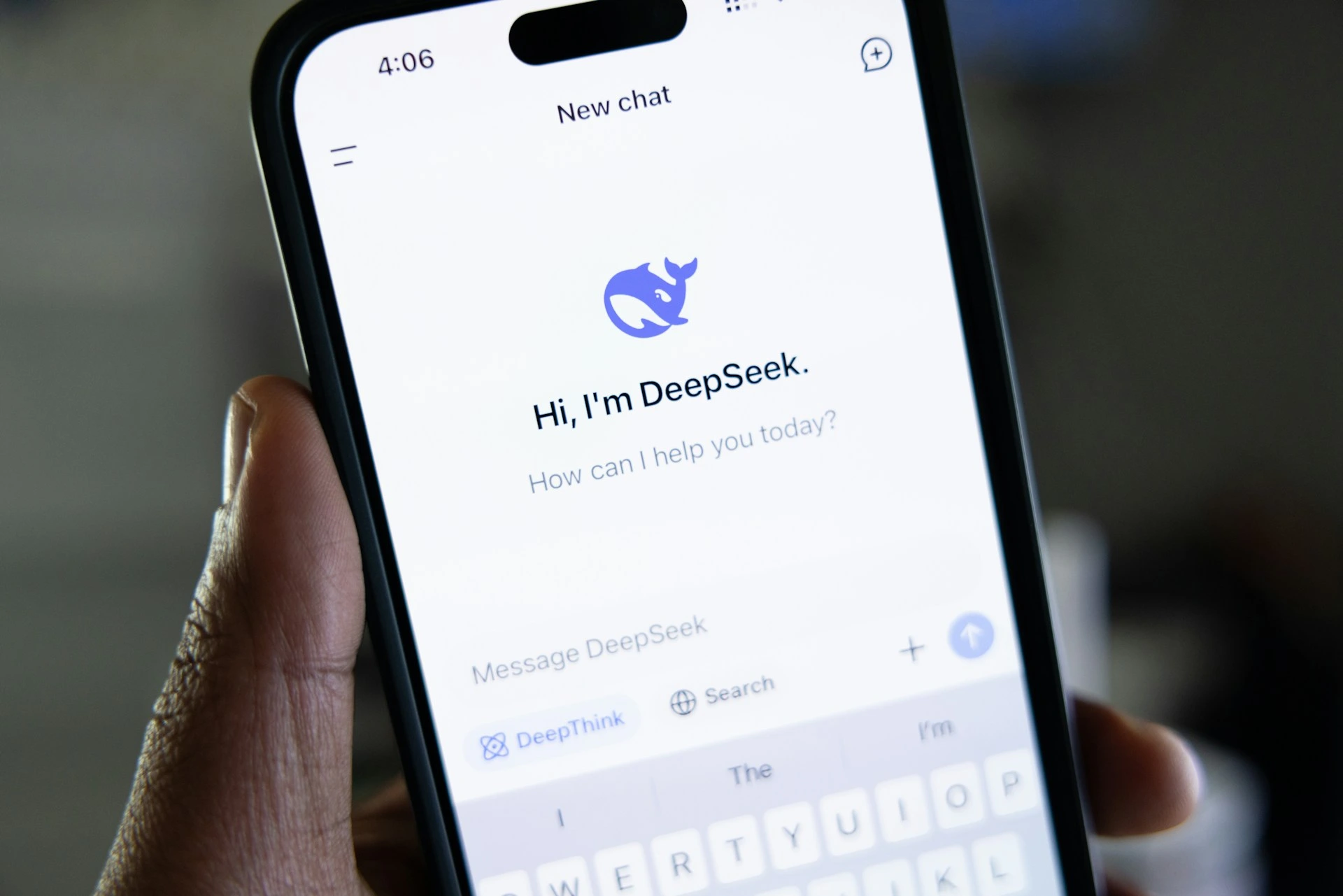

Photo by Solen Feyissa on Unsplash

South Korea Blocks DeepSeek Downloads Over Privacy Concerns

- Written by Andrea Miliani, Former Tech News Expert

- Fact-Checked by Justyn Newman, Former Lead Cybersecurity Editor

South Korean authorities have temporarily blocked app downloads for the Chinese AI model DeepSeek due to privacy concerns.

In a Rush? Here are the Quick Facts!

- South Korean authorities removed DeepSeek’s app from Google Play and the App Store on Saturday due to security concerns.

- South Korea’s Personal Information Protection Commission said that the Chinese app did not comply with local personal data protection rules.

- DeepSeek agreed to make the necessary adjustments.

According to the Associated Press, South Korea’s Personal Information Protection Commission said that the app was removed from Google Play and the App Store on Saturday for non-compliance with personal data protection rules, preventing users from installing the popular Chinese model on their devices.

The agency said DeepSeek has already agreed to work with Korean authorities to adjust its protection measures accordingly.

A few days ago, South Korea’s National Intelligence Service (NIS) accused the Chinese company of “excessively” collecting users’ data and urged local institutions to take action, as they now appear to be doing.

The new download restriction does not affect users who had previously downloaded DeepSeek’s app. However, Nam Seok, director of the commission’s investigation division, recommended that South Korean users who had already installed the app delete it or avoid entering personal information when interacting with the AI tool.

Many government agencies, companies, and other institutions in the country have already blocked DeepSeek and restricted employees from using the AI model.

According to Reuters, there is no set date for when South Korean authorities will allow downloads to resume, but the Personal Information Protection Commission (PIPC) said in a media briefing that it expects to do so once DeepSeek complies with local privacy laws.

Representatives from the Chinese company traveled to South Korea last week to meet with local authorities and acknowledged that they had neglected aspects of the country’s data protection law.

A few days ago, Italy also blocked DeepSeek’s chatbot over unresolved privacy concerns, and a recent study revealed that the DeepSeek-R1 model posed significant security risks: it failed 91% of jailbreak tests, allowed its safety mechanisms to be bypassed, and was vulnerable to prompt injection.

Image by Daniele Franchi, from Unsplash

AI Misinformation Could Lead To Mass Bank Withdrawals

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Justyn Newman, Former Lead Cybersecurity Editor

A new British study warns that AI-generated disinformation circulating on social media is increasing the risk of bank runs and urges financial institutions to step up their monitoring efforts, as first reported by Reuters.

In a Rush? Here are the Quick Facts!

- AI-generated fake news on social media increases the risk of bank runs.

- A UK study found AI-driven disinformation could trigger mass withdrawals from banks.

- Researchers urge banks to monitor social media to detect and counter fake narratives.

The research, published by UK-based Say No to Disinfo and communications firm Fenimore Harper, highlights how AI makes disinformation cheaper, faster, and more effective. As a result, a wider range of actors, including those driven by financial, ideological, or political motives, could exploit this vulnerability.

The ease of online banking and rapid money transfers further increase banks’ exposure to such risks.

To analyze the potential impact, the researchers created an AI-generated fake news campaign targeting banks’ financial stability. False headlines were designed to exploit existing fears, using doppelganger websites and AI-generated social media content.

The researchers simulated large-scale amplification, generating 1,000 tweets per minute at minimal cost. A poll of 500 UK customers showed that after exposure to the disinformation, 33.6% were extremely likely and 27.2% somewhat likely to move their money, with 60% inclined to share the content.

Based on average UK bank account balances, the researchers estimate that a single disinformation campaign could move £10 million, and that the cost of shifting £150 million could be as low as $90–$150.

Despite the speed and ease of such attacks, the researchers say that financial institutions remain unprepared. Banks lack disinformation specialists, proactive monitoring, and crisis response plans. Current security measures focus on cyber threats while neglecting AI-driven influence operations.

AI-enhanced disinformation has the potential to destabilize the financial sector by eroding trust and triggering large-scale withdrawals. Without proactive measures, the researchers claim these campaigns could cause widespread economic damage.

Reuters notes that concerns over AI-driven disinformation follow the 2023 collapse of Silicon Valley Bank, where depositors withdrew $42 billion in a single day.

Regulators, including the G20’s Financial Stability Board, have since cautioned that generative AI could exacerbate financial instability, warning in November that it “could enable malicious actors to generate and spread disinformation that causes acute crises,” such as flash crashes and bank runs, as reported by Reuters.

While some banks declined to comment to Reuters, UK Finance stated that financial institutions are actively managing AI-related risks.

The study’s release coincides with an AI summit in France, where leaders are shifting focus from AI risks to promoting its adoption.