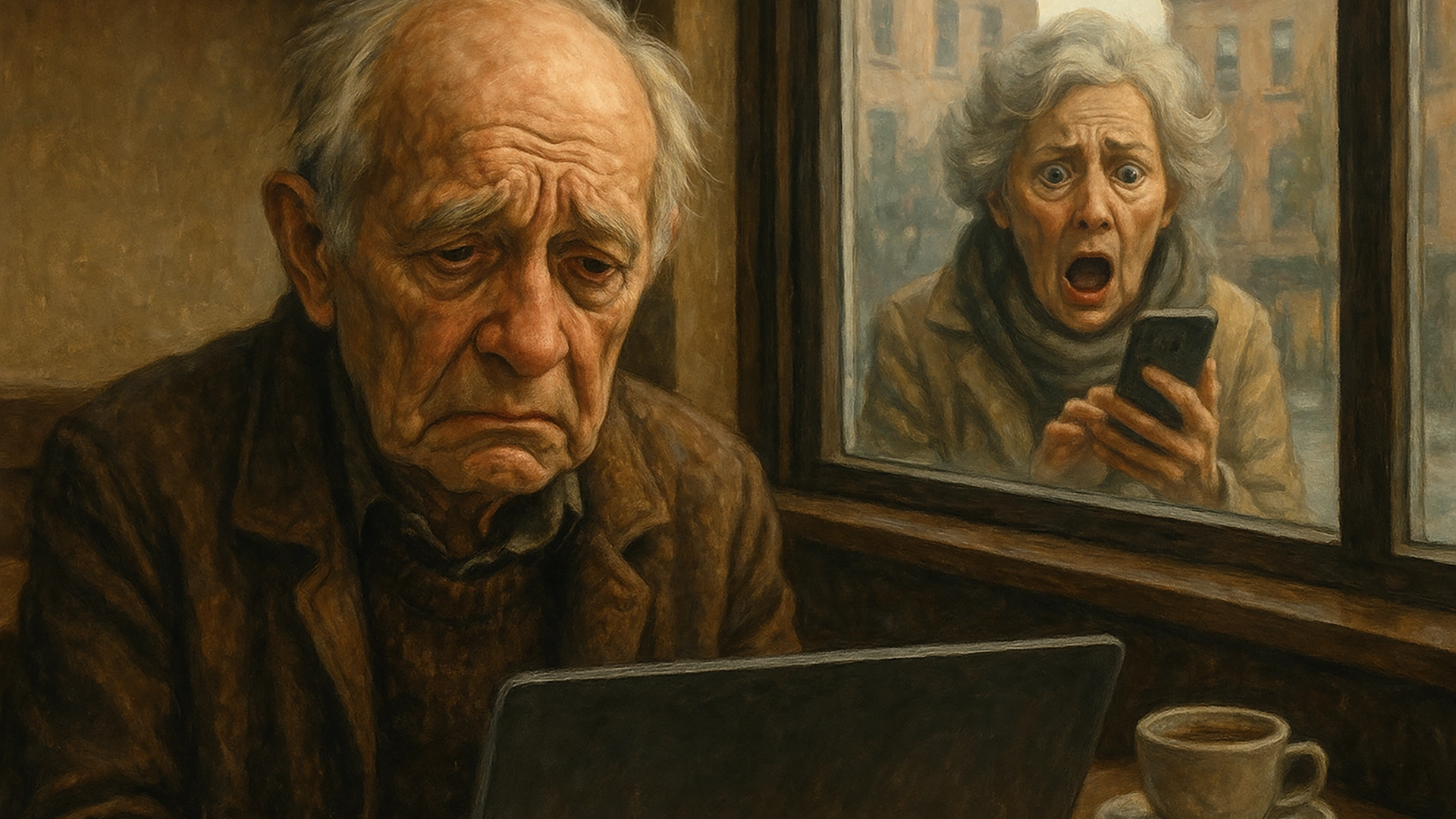

Opinion: Elder Fraud Is Surging, And Online Scams Will Claim Even More Victims—Of All Ages

- Written by Andrea Miliani, Former Tech News Expert

- Fact-Checked by Sarah Frazier, Former Content Manager

Citizens over 60 lost nearly $5 billion to scammers in 2024. As technology and AI evolve, older generations are increasingly vulnerable, and this threat could soon extend to more age groups if the right measures aren’t taken.

The FBI published its latest Internet Crime Report a few days ago, and among all the concerning data, what saddened me most was the dramatic rise in financial losses among people over 60: around $4.8 billion in 2024, a 43% increase compared to 2023.

I’ve noticed how seniors have embraced new technologies. In European countries like Spain, where I live, I’ve seen groups of older adults glued to their mobile devices on the bus, just like any teenager, or almost anyone these days. They play Candy Crush, chat on WhatsApp, and watch videos, perhaps unknowingly falling victim to malicious actors who specifically target this vulnerable group.

For decades, this age group has made significant efforts to adopt new technologies, from VCRs and cordless phones to camcorders, flat-screen TVs, and smartphones. Yet the rapid pace of AI development is making it harder for them to keep up, and it is leaving even younger generations struggling to stay on track.

The FBI’s Internet Crime Complaint Center (IC3) raised serious concerns about this vulnerable group and the multiple ways scammers have exploited them. With the rise of AI and more advanced deepfakes and scams, I can’t help but wonder: are we doing enough to protect the most vulnerable—and to contain this growing threat?

Elder Fraud On The Rise

This isn’t new. For years, many organizations have been warning about seniors’ vulnerabilities and have launched campaigns to help them avoid getting scammed. But it’s not working—and it’s getting worse.

In 2022, the FBI launched a campaign to raise awareness among older adults, sharing real stories to highlight how likely they are to become scam victims.

“I served in World War II and Korea, I was a federal judge, I was the director of the FBI, director of the CIA, and I was the target of an elder fraud scam,” said William Webster in a video for the FBI’s campaign. “If this can happen to me, this can happen to you.”

Elder fraud can impact anyone—even a former intelligence agency boss. Watch our new PSA to hear former #FBI and @CIA Director William Webster’s story—and to get his advice for steering clear of scams that target senior citizens: https://t.co/GvnJaBvflq pic.twitter.com/AqHsY6x9A4 — FBI (@FBI) May 10, 2022

Even the most educated and well-prepared seniors are vulnerable to online scams. For many years, the most common types of fraud targeting them have involved tech support, romance, and gambling, and now, increasingly, cryptocurrency.

It’s Getting Worse

The FBI’s report is based only on filed complaints and declared losses. But what about the people who haven’t said anything? What about those too embarrassed or disappointed to share their experiences and ask for help?

“Seniors may be less inclined to report fraud because they don’t know how, or they may be too ashamed at having been scammed,” wrote the FBI on its website. “They might also be concerned that their relatives will lose confidence in their abilities to manage their own financial affairs. And when an elderly victim does report a crime, they may be unable to supply detailed information to investigators.”

So what if the real losses are far higher than the reported figures, and that 43% increase is actually much larger?

Institutions in other countries, such as the Canadian Anti-Fraud Centre (CAFC), have also warned about the rise in scams and are encouraging people to report every one. Online threats don’t just affect people everywhere in the world; they are also carried out by international criminal organizations that operate across multiple countries, transferring stolen money through different banks and digital platforms. This is a global, interconnected issue that affects us as a society.

Why Are Seniors More Vulnerable?

There are several reasons older adults are disproportionately targeted, and often successfully deceived, by scammers. For one, digital literacy levels tend to be lower among seniors. While many are active online, they may not be as comfortable navigating complex security settings, identifying phishing attempts, or recognizing when a website, phone call, or email is suspicious. Double-checking URLs and spotting the basic warning signs of email scams can be hard even for young people, and a lot more challenging for seniors. Scammers readily exploit these gaps.

The FBI explains that certain traits attract hackers and malicious actors: politeness, savings, and property. “Seniors are often targeted because they tend to be trusting and polite,” states a document in the Scams and Safety section of its website. “They also usually have financial savings, own a home, and have good credit—all of which make them attractive to scammers.”

Isolation is another critical factor. Many seniors live alone or have limited social interaction, which not only increases their risk of being manipulated but also means they may not have someone nearby to double-check suspicious messages or transactions. Scammers often exploit this isolation to build false trust, offering emotional support or urgent assistance in fabricated emergencies. It’s also the key ingredient in the increasingly common deepfake scams that prey on people’s desire for love and romance.

Cutting-Edge Technology Scams

But even those who have good company and a close-knit family are not immune. New deepfake technologies can mimic the voices of relatives and friends and exploit the classic urgent-emergency tactic that many people, not only older ones, fall for.

Today, my dad got a phone call no parent ever wants to get. He heard me tell him I was in a serious car accident, injured, and under arrest for a DUI and I needed $30,000 to be bailed out of jail. But it wasn’t me. There was no accident. It was an AI scam. — Jay Shooster (@JayShooster) September 28, 2024

AI can now generate incredibly realistic voices, emails, and video deepfakes that mimic real people, including family members or government officials, such as the deepfake robocalls that used then-President Joe Biden’s voice to discourage citizens from voting.

With just a few clips from social media, scammers can clone a voice and make a panicked phone call claiming to be a loved one in trouble. It’s terrifying, and it’s working.

Chatbots can get very personal, too. This technology can help scammers hold realistic conversations, impersonate tech support agents (one of the most popular techniques used by scammers targeting seniors), and adapt to a victim’s responses. Malicious actors may need little more than a few lines of code and access to a database of leaked information.

What Are The Current Solutions?

Banks, law enforcement agencies, and cybersecurity organizations are stepping up efforts to combat elder fraud. Financial institutions are implementing extra layers of verification, AI-powered fraud detection systems, and even sending alerts when unusual activity is detected—especially in accounts belonging to older customers.

The FBI regularly runs awareness campaigns and partners with local organizations to educate seniors about new threats—and so do certain banks. Community centers are hosting digital safety workshops, and caregivers are increasingly being trained to recognize early warning signs of fraud.

This #WorldElderAbuseAwarenessDay, visit the #FBI elder fraud page to learn about common fraud schemes that target older people and practical tips on how to protect yourself or your loved ones from scammers. https://t.co/ccz0rnjDWj pic.twitter.com/zsIc6nYF09 — FBI (@FBI) June 15, 2024

Telecom companies such as O2 have also turned to AI and other new technologies to counter these threats. The UK mobile operator built an AI system called Daisy, which uses the voice of an older woman to engage fraudsters in lengthy conversations that lead nowhere.

However, while these initiatives are essential, they’re not enough. The pace of technological advancement is simply too fast for traditional prevention methods to keep up on their own. We need a more proactive, collective approach.

A Light On A Collaborative Path

It’s true that tech companies must also take on a significant share of the responsibility for the consequences of the systems and tools they are building. But we need to go a step further than simply demanding that they implement appropriate and responsible security measures.

It’s also about supporting government and cybersecurity organizations in developing strategies and measures to combat online threats. And it’s about asking ourselves: do we really know how our mothers, fathers, grandparents, uncles, and elderly friends are interacting with new technologies?

Perhaps now is a good time to ask whether we are taking the right security measures ourselves—and whether we truly understand the risks and the urgency of closing this generational and technological gap.

Image by CardMapr.nl, from Unsplash

Visa’s New AI System Lets Bots Shop With Your Credit Card

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Sarah Frazier, Former Content Manager

Visa has launched Intelligent Commerce, a new system allowing AI agents to shop and pay securely on users’ behalf under preset spending limits.

In a rush? Here are the quick facts:

- AI agents will access Visa’s network under user-defined spending limits.

- Visa partners include OpenAI, Microsoft, IBM, and Stripe.

- AI-ready cards use tokenization to secure digital payment credentials.

Visa announced on Sunday that it will be launching a new initiative called Visa Intelligent Commerce, aiming to make it easier for AI “agents” to shop on behalf of users. These agents will be able to search, select, and pay for goods and services according to users’ preferences and budgets.

“Soon people will have AI agents browse, select, purchase and manage on their behalf,” said Jack Forestell, Visa’s Chief Product and Strategy Officer. “These agents will need to be trusted with payments, not only by users, but by banks and sellers as well.”

To make this possible, Visa has opened up its payments network to developers working with top AI firms like OpenAI, Anthropic, Microsoft, Mistral AI, and Perplexity. The program includes pilot projects already underway and is expected to expand in 2026.

The new system will let users set spending limits and personalize recommendations. “The early incarnations of agent-based commerce are doing well on discovery, but struggle with payments,” Forestell added. “That’s why we started working with them.”

Visa’s program introduces AI-ready cards with tokenized credentials, allowing agents to confirm identities and authorize transactions. Developers can access APIs to integrate these tools into their platforms, making it possible for AI to handle tasks like booking flights or ordering groceries automatically.

While AI agents may eventually handle routine shopping, Visa says consumers will retain control. “Each consumer sets the limits, and Visa helps manage the rest,” said Forestell.

With U.S. credit card debt surpassing $1.2 trillion, Visa says its system will ensure user consent and control, easing concerns over spending, as the AP noted. According to Visa, the goal is to simplify commerce while maintaining trust and security, turning AI-powered shopping from concept into reality.