Image by Emiliano Vittoriosi, from Unsplash

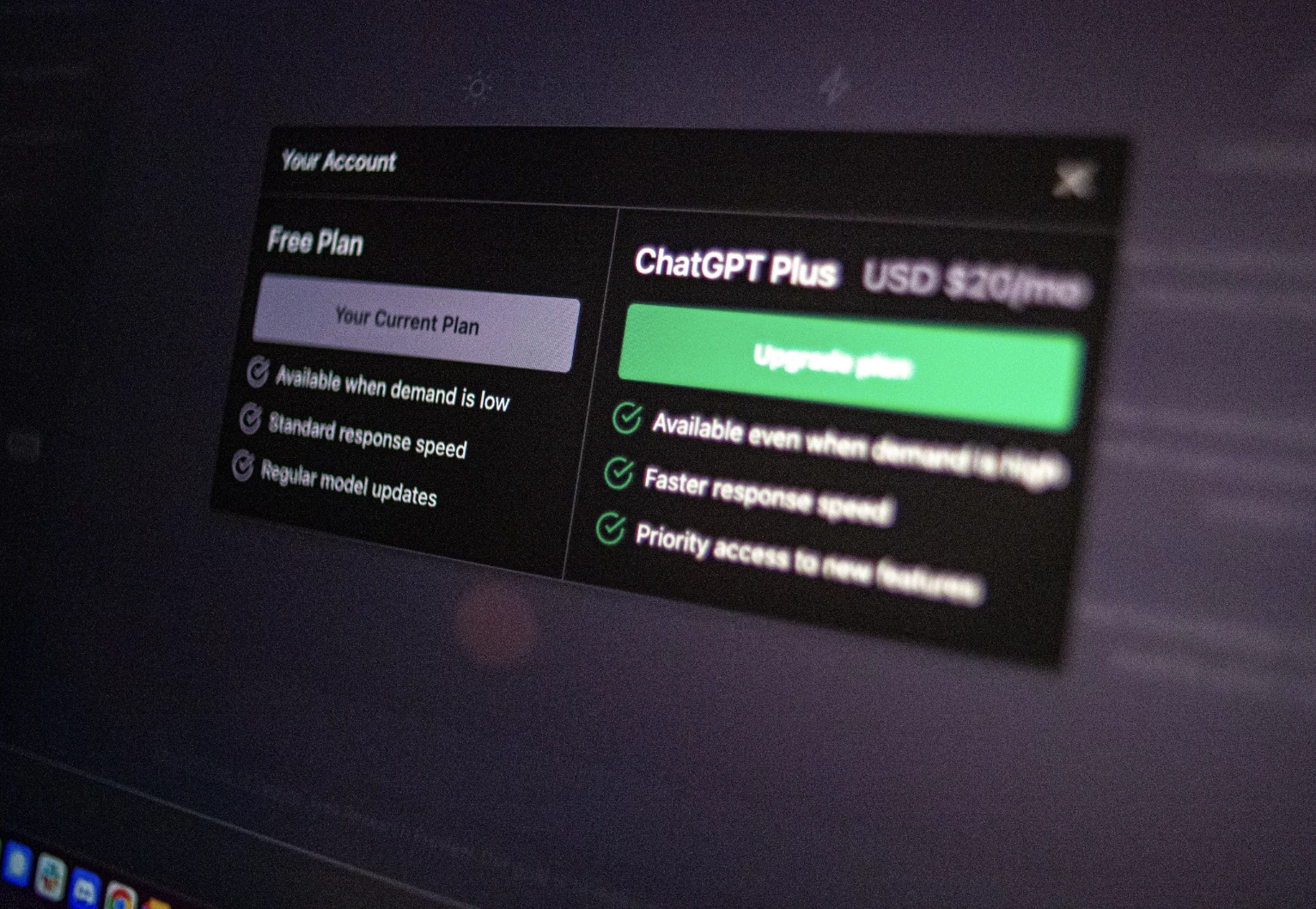

OpenAI Faces €15 Million Fine For Data Privacy Violations In Italy

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Justyn Newman Former Lead Cybersecurity Editor

OpenAI has been fined €15 million by Italy’s data protection authority, Il Garante per la protezione dei dati personali, following an investigation into how it manages its ChatGPT service, as reported by the Italian news agency ANSA.

In a Rush? Here are the Quick Facts!

- The investigation found that OpenAI mishandled user data and lacked a proper legal basis for using it to train ChatGPT.

- Italy requires OpenAI to run a six-month public awareness campaign on data usage.

- OpenAI plans to appeal the fine, calling it disproportionate to its Italian revenue.

The penalty was imposed for a series of violations, according to ANSA: failing to report a data breach that occurred in March 2023, using user data to train the AI without an adequate legal basis, and failing to meet transparency obligations regarding the information provided to users.

The investigation, launched in March 2023, was prompted by concerns over how OpenAI collects and processes personal data. It was further influenced by a recommendation from the European Data Protection Board (EDPB), which sought a unified approach to handling data in the context of AI services, reported ANSA.

Among the specific issues identified was the lack of adequate age verification, which could expose children under 13 to inappropriate content, according to ANSA.

In response to these findings, Il Garante has ordered OpenAI to run a six-month institutional communication campaign across radio, television, newspapers, and the internet, as reported by ANSA.

The campaign aims to raise public awareness of how ChatGPT works, particularly how it collects data and what rights users have, such as the right to object to the processing of their personal data and to have it rectified or erased, says ANSA.

OpenAI has said it intends to appeal the decision, arguing that the fine is disproportionate to the revenue it generated in Italy during the same period, ANSA reports. The company also pointed to its previous cooperation with the Garante, noting that ChatGPT was successfully reactivated in Italy just a month after a 2023 suspension order was issued.

Image by pvproductions, from Freepik

AI-Generated Malware Variants Evade Detection In 88% Of Cases

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Justyn Newman Former Lead Cybersecurity Editor

Recent research has found that AI could be used to generate up to 10,000 new malware variants that evade detection in 88% of cases, as reported by The Hacker News.

In a Rush? Here are the Quick Facts!

- LLMs rewrite malware to evade detection by creating natural-looking code variations.

- Researchers’ iterative rewriting algorithm maintains malicious functionality while bypassing detection systems.

- LLM obfuscation outperforms traditional tools by mimicking original code more effectively.

The findings highlight the growing risk of large language models (LLMs) being used for malicious purposes.

The research, led by cybersecurity experts at Palo Alto Networks, used an adversarial machine learning algorithm to create new, hard-to-detect forms of malware. By using LLMs to rewrite malicious JavaScript code, the team generated thousands of novel variants without altering the malware’s core functionality.

The aim was to exploit a weakness of traditional malware detection tools, which often struggle with obfuscation techniques such as variable renaming or code minification.

One of the most concerning findings was that these AI-generated variants could easily evade security tools such as VirusTotal, which flagged only 12% of the modified samples as malicious.

The LLM’s ability to perform multiple subtle code transformations, such as dead code insertion, string splitting, and whitespace removal, made it possible to rewrite existing malware into a form that was nearly indistinguishable from benign code.

These transformations were effective enough to fool even deep learning models, lowering the malicious score from nearly 100% to less than 1%.

The research also highlighted a significant advantage of LLM-based obfuscation over traditional tools. While existing malware obfuscators are widely known and produce predictable results, LLMs create more natural-looking code, making it much harder for security systems to identify malicious activity.

Because the rewritten code looks natural, AI-generated malware is more resilient to detection, underscoring the need to adapt detection strategies as threats evolve.

To counter these sophisticated LLM-based attacks, the research team implemented a defensive strategy by retraining their malicious JavaScript classifier using tens of thousands of LLM-generated samples.

This retraining improved detection rates by 10%, strengthening the classifier’s ability to identify newly generated malware variants. Even so, the findings underscore the urgent need for continuous innovation in cybersecurity to keep pace with the evolving capabilities of AI-driven cybercrime.

Moreover, generative AI tools have driven a parallel surge in macOS-targeted malware. As macOS market share grew 60% in three years, malware-as-a-service (MaaS) made it cheaper and easier for attackers to target sensitive data, such as cryptocurrency wallets and Keychain details.

Additionally, AI-powered robots have become a potential security concern. Researchers discovered that jailbreaking AI-controlled robots could lead to dangerous actions, such as crashing self-driving cars or using robots for espionage.

The researchers’ RoboPAIR algorithm, which bypasses safety filters, achieved a 100% success rate in manipulating robots into performing harmful tasks, including using weapons and locating explosive devices.

As cybercriminals increasingly leverage AI for more sophisticated attacks, organizations and individuals alike must stay vigilant, continuously update their defense