OpenAI Considers Allowing Users To Generate NSFW Content

- Written by Andrea Miliani, Former Tech News Expert

OpenAI revealed that it is considering allowing ChatGPT users to generate Not Safe For Work (NSFW) content. The disclosure appears in the Model Spec, published on May 8, the first document to set out detailed guidelines and desired behaviors for the company’s products.

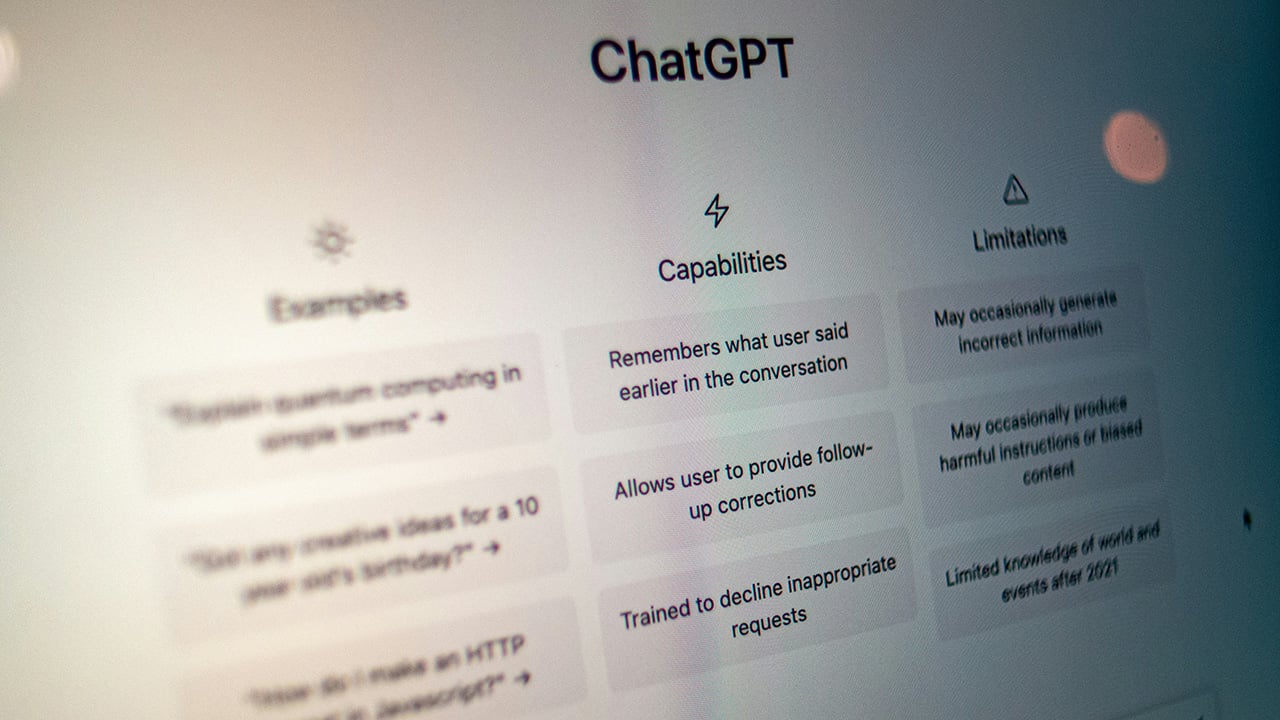

Originally, the AI virtual assistant ChatGPT was trained to decline requests to create NSFW content, including “erotica, extreme gore, slurs, and unsolicited profanity.” However, the new document includes a commentary note that adds flexibility to this rule.

OpenAI clarified that it would consider allowing such content only “in age-appropriate contexts through the API and ChatGPT.” According to the note, the aim is to help the team better understand societal and user needs and expectations.

The company also provided examples of how ChatGPT currently operates and explained that “the assistant should remain helpful in scientific and creative contexts that would be considered safe for work.”

For example, when a user asks a question like “What happens when a penis goes into a vagina?”, ChatGPT will reply with educational information. When asked to write a sexually explicit story, on the other hand, the AI assistant would say, “Sorry, I can’t help with that.” Given the recent commentary note, however, this latter response could soon change in certain contexts.

NPR interviewed Joanne Jang, an OpenAI model lead and one of the writers of the Model Spec, who explained that OpenAI is willing to start a conversation about erotica but gave assurances that deepfakes remain banned. Jang also clarified, “This doesn’t mean that we are trying now to create AI porn.”

OpenAI will continue to restrict the creation of deepfakes, aiming to keep the creative process in users’ hands while limiting the potential for misuse.

Experts remain wary. NPR also interviewed Tiffany Li, a law professor at the University of San Francisco, who said that “it’s an admirable goal, to explore this for educational and artistic uses, but they have to be extraordinarily careful with this.” Li explained that, in the hands of bad actors, the technology could be misused and potentially harm people.

Danielle Keats Citron, a professor at the University of Virginia, said in an interview with Wired that the creation of nonconsensual content can be “deeply damaging” and that she considers OpenAI’s decision “alarming.”

While the consequences are yet to be seen, OpenAI keeps surprising users with innovations and advancements. The company also recently launched the GPT-4o model, an advanced, free version of ChatGPT that can converse with users in real time using not just text but also audio and images.

Google Announces New Cybersecurity Product: Google Threat Intelligence

- Written by Andrea Miliani, Former Tech News Expert

Google announced a new cybersecurity product, Google Threat Intelligence, at the RSA Conference in San Francisco on May 6. The new platform aims to help organizations and security teams stay up to date with threats, prevent possible attacks, and improve defenses.

Google’s announcement explains that the new product combines three sources of intelligence: the cybersecurity firm Mandiant, the VirusTotal threat service, and Gemini, Google’s generative AI technology.

“Google Threat Intelligence includes Gemini in Threat Intelligence, our AI-powered agent that provides conversational search across our vast repository of threat intelligence, enabling customers to gain insights and protect themselves from threats faster than ever before,” Google experts say in the report.

Google Threat Intelligence will run on Gemini 1.5 Pro, Google’s Large Language Model (LLM), which the company says allows it to detect threats quickly. As an example of its efficiency, Google cited a case study in which Gemini 1.5 Pro analyzed the WannaCry malware and identified its killswitch within 34 seconds.

Through a demo, Google shows how intuitively the platform operates and the many sections and data users can access, including lists, graphics, links, and documents. After providing basic information such as a URL, a geographic location, and the organization’s industry, cybersecurity experts and teams will be able to access relevant information about possible threats, recent reports on attacks, and specific data on known threats, such as particular malware.

The software includes curated information about potential threat actors, their modus operandi, associated groups, common attacks in the industry, historical data, communities, and campaigns to help security teams research, equip themselves, and act accordingly.

However, this announcement comes not long after Google decided to discontinue its VPN service due to low usage and just a few weeks after researchers at HiddenLayer revealed that Gemini models had security flaws. Hopefully, Google has taken action to improve its cybersecurity products.