Photo by Agustin Diaz Gargiulo on Unsplash

Meta Withdraws Generative AI Services in Brazil Over Government Objections

- Written by Andrea Miliani Former Tech News Expert

- Fact-Checked by Justyn Newman Former Lead Cybersecurity Editor

Meta Platforms decided to suspend its AI services in Brazil after the Brazilian government objected to the tech company’s policies on AI and the use of personal data to train AI models.

According to Reuters, Meta announced its decision this Wednesday, and it will affect its 200 million users. Brazil’s audience represents Meta’s second-largest user base, after India.

Meta is in discussions with Brazil’s National Data Protection Authority (ANPD) and will not provide the AI tools in the country until both parties reach an agreement.

According to the BBC, on July 2nd, the ANPD said it had to intervene over the “imminent risk of serious and irreparable damage, or difficulty repairing fundamental rights of the affected [account] holders”. The government agency gave Meta five days to comply or face daily fines of R$50,000 ($9,000).

Pedro Martins, Academic Coordinator at Data Privacy Brasil, told the BBC that the organization was especially concerned over the use of children’s and teenagers’ content.

Meta considered the decision a “step backward for innovation” and said in a recent statement that it would address the authorities’ concerns. However, Brazil is not the first country to block Meta’s new AI policies: the European Union and the United Kingdom have also requested policy changes.

Meta announced in June that it had been working on possible multimodal AI models for Europe but recently confirmed to Axios that it will not proceed with this approach.

Governments aren’t the only organizations against Meta’s AI training policies. Apple also rejected the integration of Meta’s chatbot with iOS, and hundreds of thousands of artists have decided to opt out of Meta’s platforms and join Cara, a new social media app for artists.

PrivacyLens: New Camera Protects Privacy by Turning People into Stick Figures

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Justyn Newman Former Lead Cybersecurity Editor

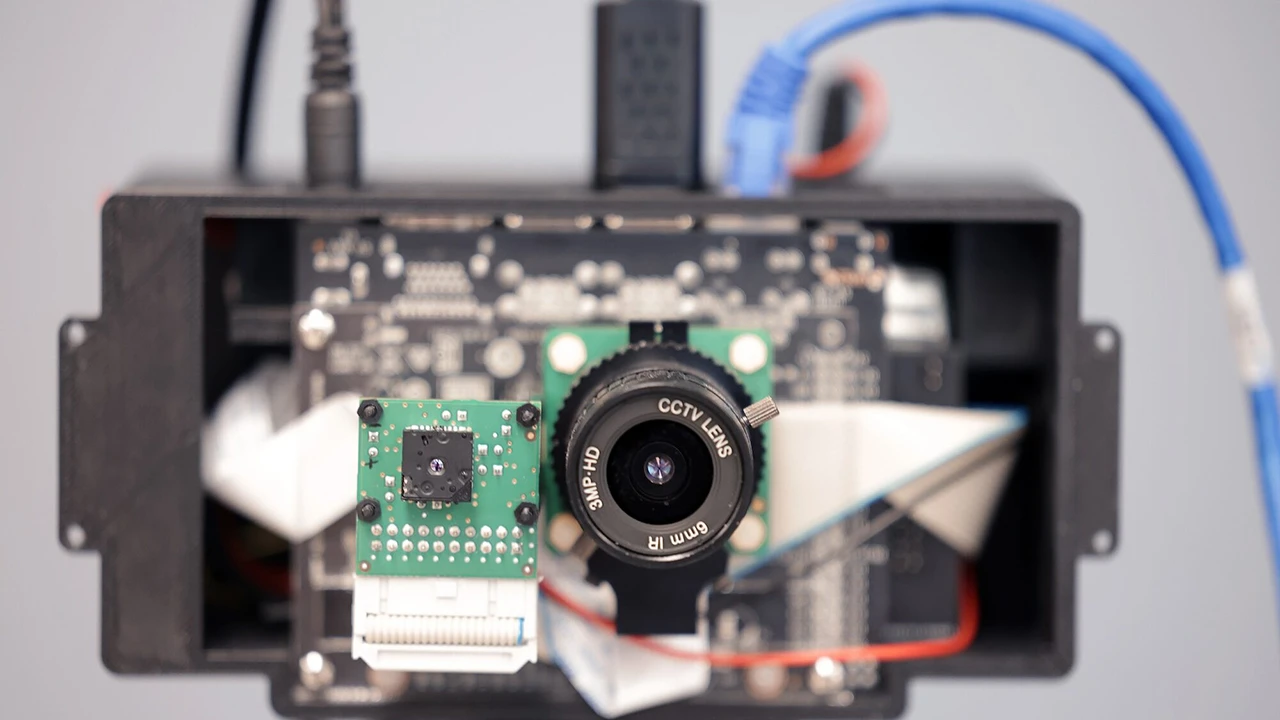

Researchers at the University of Michigan have announced the development of PrivacyLens, a camera technology designed to remove personally identifiable information (PII) from images and videos. The removal happens before the information is stored or sent anywhere, which could significantly improve the privacy of smart home devices and internet-connected cameras.

PrivacyLens utilizes a combination of a standard video camera and a heat-sensing camera. The heat-sensing camera detects people based on their body temperature, and replaces their likeness with a generic stick figure that mimics their movements. This ensures that devices relying on the camera can function without revealing the identity of the individuals in view.

Moreover, PrivacyLens eliminates the storage of raw photos on devices or cloud servers, thereby further enhancing user privacy.
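The masking idea described above can be illustrated with a short sketch. This is not the researchers' implementation: the temperature band, the function names `person_mask` and `redact_frame`, and the flat placeholder color are all assumptions made for illustration, and the stick-figure rendering step is left out. The sketch only shows how a thermal frame could decide which pixels of an aligned color frame to discard before anything is stored.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]

# Assumed temperature band (degrees Celsius) for detecting a person.
# These thresholds are illustrative, not values from the PrivacyLens study.
BODY_TEMP_MIN_C = 30.0
BODY_TEMP_MAX_C = 40.0

# Hypothetical placeholder color written over redacted pixels.
PLACEHOLDER: Pixel = (0, 0, 0)


def person_mask(thermal: List[List[float]],
                lo: float = BODY_TEMP_MIN_C,
                hi: float = BODY_TEMP_MAX_C) -> List[List[bool]]:
    """Mark every thermal pixel whose temperature falls inside the body band."""
    return [[lo <= t <= hi for t in row] for row in thermal]


def redact_frame(rgb: List[List[Pixel]],
                 thermal: List[List[float]]) -> List[List[Pixel]]:
    """Return a copy of the color frame with person pixels replaced.

    Assumes the two frames are already aligned and have equal dimensions.
    """
    if len(rgb) != len(thermal) or any(
        len(r) != len(t) for r, t in zip(rgb, thermal)
    ):
        raise ValueError("frames must have the same dimensions")
    mask = person_mask(thermal)
    return [
        [PLACEHOLDER if is_person else px
         for px, is_person in zip(rgb_row, mask_row)]
        for rgb_row, mask_row in zip(rgb, mask)
    ]


if __name__ == "__main__":
    rgb = [[(10, 10, 10), (20, 20, 20)],
           [(30, 30, 30), (40, 40, 40)]]
    thermal = [[21.0, 36.5],
               [22.0, 35.0]]
    print(redact_frame(rgb, thermal))
```

A real device would also need to calibrate and align the two sensors and to draw the stick figure in place of the removed region; those steps are outside the scope of this sketch.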

“Most consumers do not think about what happens to the data collected by their favorite smart home devices. In most cases, raw audio, images and videos are being streamed off these devices to the manufacturers’ cloud-based servers, regardless of whether or not the data is actually needed for the end application,” said Alanson Sample, U-M associate professor of computer science and engineering and the corresponding author of the study.

This technology has the potential to address growing concerns about privacy leaks from smart home devices. Incidents such as intimate photos captured by Roomba vacuum cleaners being leaked on social media, and the recent hacking of 150,000 Verkada security cameras, have highlighted the vulnerability of traditional camera systems. The latter incident allowed hackers to access live feeds from cameras in sensitive locations including women’s health clinics, psychiatric hospitals, companies, police departments, prisons and schools.

Another notable case involved Heather Hines, a 24-year-old from Southern California, who experienced a privacy breach with her Wyze security cameras. A caching issue caused thousands of customers to see photos and videos from other people’s homes when the cameras came back online, affecting 13,000 accounts. “It made me feel violated,” Hines, who used the cameras to monitor her sick cat, told CNN.

In this context, PrivacyLens could also make patients more comfortable using cameras for chronic health monitoring at home. Because people are replaced with stick figures, the camera wouldn’t capture sensitive information about them in their most private spaces.

The applications of PrivacyLens extend beyond the home. For instance, car manufacturers could potentially use this technology to prevent autonomous vehicles from being used for surveillance. Additionally, companies that use cameras to collect data outdoors could use PrivacyLens to comply with privacy laws.

The researchers are currently working to improve the technology and bring it to market. While there are some limitations, such as the resolution of the thermal camera, PrivacyLens shows promise for a future where cameras can be used without compromising privacy.