Image by Anurag R Dubey, from Wikimedia Commons

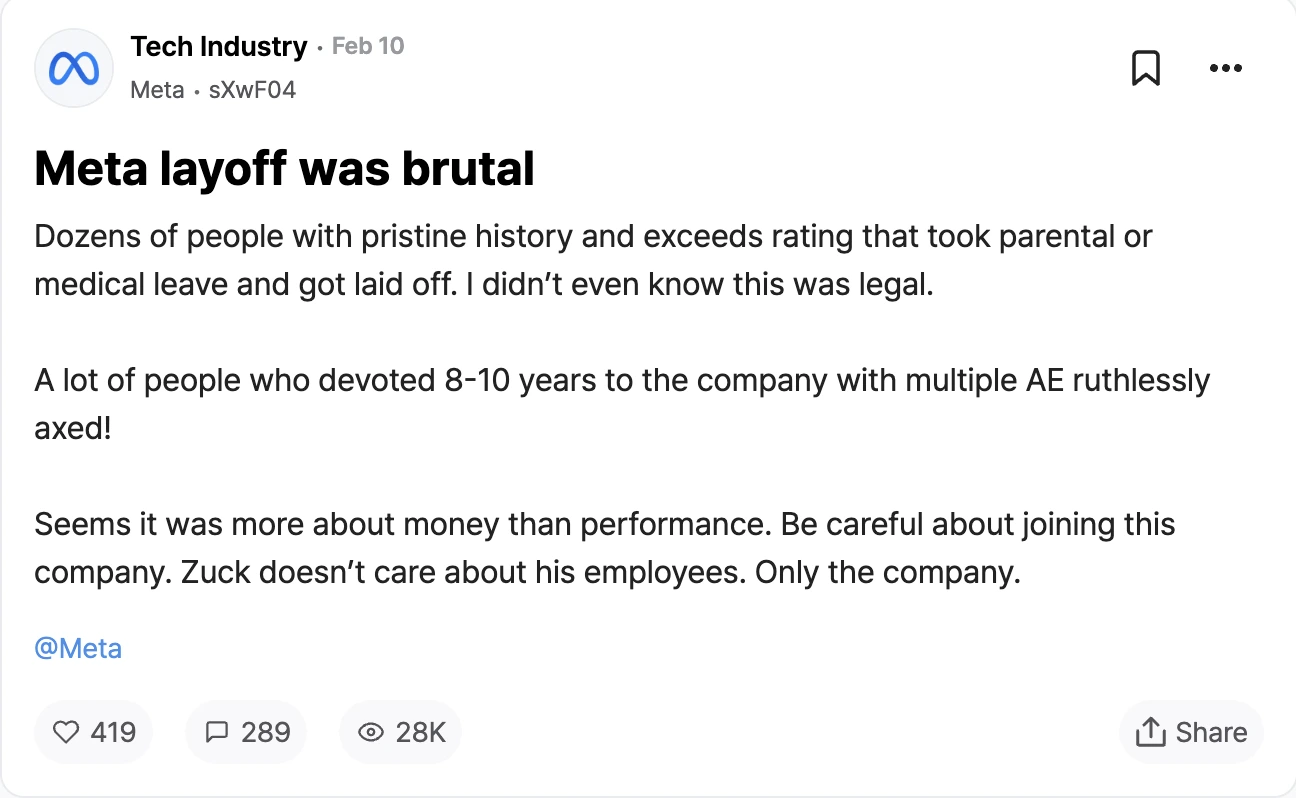

Meta Accused of Unfair Layoffs, Including Workers On Parental Leave

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Sarah Frazier, Former Content Manager

Meta’s recent wave of layoffs has sparked outrage among employees, with many accusing CEO Mark Zuckerberg of running the “cruelest tech company out there,” as first reported by Fortune.

In a Rush? Here are the Quick Facts!

- Meta laid off 3,600 employees, citing performance issues.

- Workers claim layoffs unfairly targeted those on parental and medical leave.

- Some affected employees had no history of underperformance.

The latest job cuts, which Meta framed as performance-based, have led to claims that some workers were unfairly labeled as low performers, including those on parental or medical leave.

The layoffs are part of Zuckerberg’s plan to cut around 3,600 jobs, roughly 5% of the workforce. In a memo last month, he justified the move as targeting underperformers, as first reported by Bloomberg. However, affected employees are pushing back, claiming they had no history of poor performance.

“The hardest part is Meta publicly stating they’re cutting low performers, so it feels like we have the scarlet letter on our backs,” one former employee told Business Insider. “People need to know we’re not underperformers,” he added.

On Blind, an anonymous forum for verified employees, Meta workers described a tense and unpredictable work environment. Some alleged that they were let go while on approved leave, raising concerns about the fairness of the process.

“[I] consistently exceeded expectations multiple years, had a baby in 2024, got laid off,” another former employee wrote. Another, who had been on maternity leave for six months, said they had “no history of below average performance” and were now seeking legal advice.

Fortune notes that the layoffs reflect a broader shift in Silicon Valley, where job security is eroding, remote work policies are tightening, and diversity initiatives are being cut. Some employees believe the industry’s leaders are deliberately reshaping corporate culture to curb worker influence.

Zuckerberg called 2023 the “year of efficiency,” continuing job cuts that began in 2022. After eliminating 11,000 positions in 2022, the company laid off another 10,000 employees in 2023 as part of its ongoing restructuring efforts.

Despite the layoffs, Meta’s stock has surged 60% this year, surpassing market expectations. Reuters attributes this growth to strong digital ad revenue, which continues to fund the company’s AI investments.

Meta’s deep investments in AI suggest where this trend may lead. As AI becomes more capable, the justification for performance-based layoffs may shift toward full-scale automation.

While some tech leaders claim AI will enhance human productivity, others, like Klarna CEO Sebastian Siemiatkowski, suggest that many jobs may simply disappear. For employees at companies like Meta, this raises the question of whether efficiency-driven layoffs today are just the beginning of a more extensive AI-driven workforce reduction.

This shift has also fueled concerns over corporate motivations. Some analysts argue that promoting AI-driven job cuts is as much about financial positioning as it is about technological advancement.

Klarna, for instance, aggressively pushed its AI narrative following a steep valuation drop in 2022, leading to speculation that its automation efforts were as much about investor appeal as operational efficiency.

If Meta follows this path, employees may soon face an even more uncertain future, with AI efficiency serving as a new rationale for workforce reductions.

Image by Freepik

Shadow AI Threatens Enterprise Security

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Justyn Newman, Former Lead Cybersecurity Editor

As AI technology evolves rapidly, organizations are facing an emerging security threat: shadow AI apps. These unauthorized applications, developed by employees without IT or security oversight, are spreading across companies and often go unnoticed, as highlighted in a recent VentureBeat article.

In a Rush? Here are the Quick Facts!

- Shadow AI apps are created by employees without IT or security approval.

- Employees create shadow AI to increase productivity, often without malicious intent.

- Public models can expose sensitive data, creating compliance risks for organizations.

VentureBeat explains that while many of these apps are not intentionally malicious, they pose significant risks to corporate networks, ranging from data breaches to compliance violations.

Shadow AI apps are often built by employees seeking to automate routine tasks or streamline operations, using AI models trained on proprietary company data.

These apps, which frequently rely on generative AI tools such as OpenAI’s ChatGPT or Google Gemini, lack essential safeguards, making them highly vulnerable to security threats.

According to Itamar Golan, CEO of Prompt Security, “Around 40% of these default to training on any data you feed them, meaning your intellectual property can become part of their models,” as reported by VentureBeat.

The appeal of shadow AI is clear. Employees, under increasing pressure to meet tight deadlines and handle complex workloads, are turning to these tools to boost productivity.

Vineet Arora, CTO at WinWire, told VentureBeat, “Departments jump on unsanctioned AI solutions because the immediate benefits are too tempting to ignore.” However, the risks these tools introduce are profound.

Golan compares shadow AI to performance-enhancing drugs in sports, saying, “It’s like doping in the Tour de France. People want an edge without realizing the long-term consequences,” as reported by VentureBeat.

Despite their advantages, shadow AI apps expose organizations to a range of vulnerabilities, including accidental data leaks and prompt injection attacks that traditional security measures cannot detect.

The scale of the problem is staggering. Golan told VentureBeat that his company catalogs 50 new AI apps daily, with over 12,000 currently in use. “You can’t stop a tsunami, but you can build a boat,” Golan advises, noting that many organizations are blindsided by the scope of shadow AI usage within their networks.

One financial firm, for instance, discovered 65 unauthorized AI tools during a 10-day audit, far more than the fewer than 10 its security team had expected, as reported by VentureBeat.

The dangers of shadow AI are particularly acute for regulated sectors. Once proprietary data is fed into a public AI model, it becomes difficult to control, leading to potential compliance issues.

To tackle the growing issue of shadow AI, experts recommend a multi-faceted approach. Arora suggests organizations create centralized AI governance structures, conduct regular audits, and deploy AI-aware security controls that can detect AI-driven exploits.

Additionally, businesses should provide employees with pre-approved AI tools and clear usage policies to reduce the temptation to use unapproved solutions.