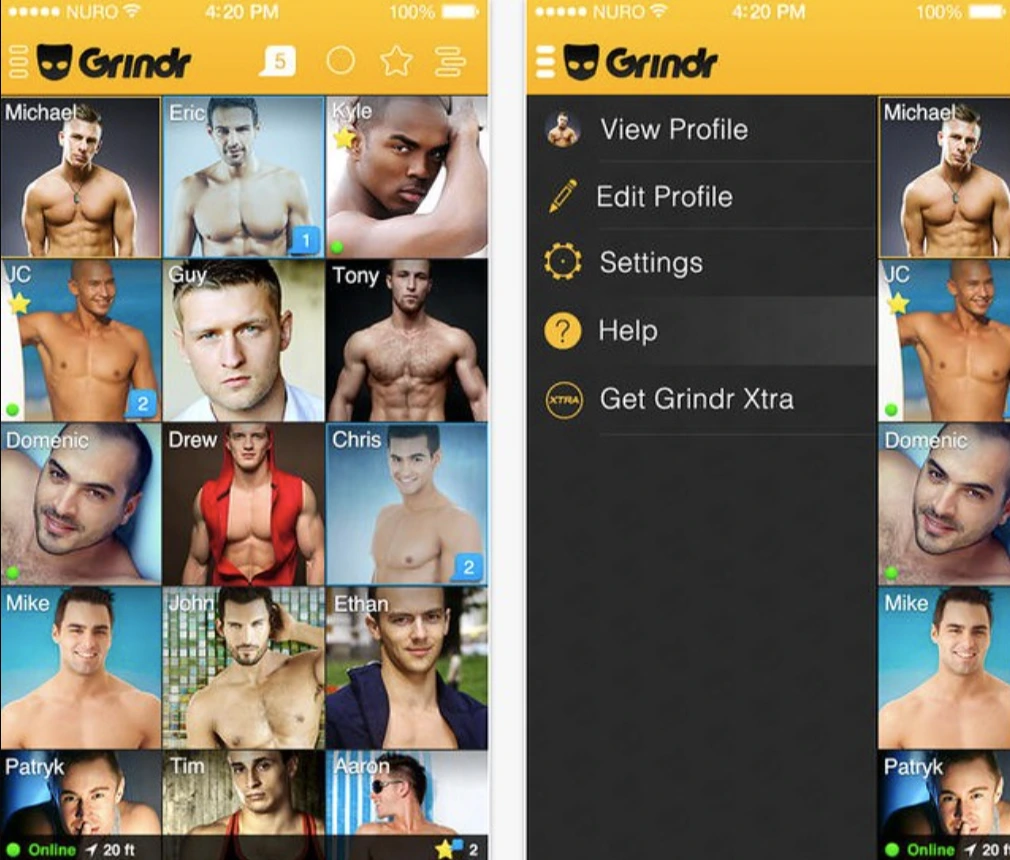

Image by Melies The Bunny, from Flickr

Grindr’s AI Wingman Aims To Streamline Dating

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Justyn Newman, Former Lead Cybersecurity Editor

In a Rush? Here are the Quick Facts!

- Grindr is testing an AI “wingman” feature for its LGBTQ+ user base.

- The AI assists with finding partners, suggesting dates, and making reservations.

- Full rollout of the AI wingman is expected by 2027.

Grindr, the popular gay dating app, is testing an AI-powered “wingman” feature designed specifically for its LGBTQ+ user base. This AI wingman functions like a chatbot, helping users find potential long-term partners, recommending date spots, and even making restaurant reservations, according to The Wall Street Journal (WSJ).

The wingman is a type of AI agent, often referred to as a bot. Unlike traditional chatbots that merely answer questions, summarize text, and generate content, these AI agents can actively navigate the internet and perform tasks on a user’s behalf, according to WSJ.

Currently, the feature is being tested by a small group, with plans to expand to around 1,000 users by the end of the year, and up to 10,000 next year. A full rollout is expected by 2027. Over time, the wingman will evolve to handle more advanced tasks, such as interacting with other AI agents, notes WSJ.

In the future, two AI wingmen could converse after their human users match, giving each user a “robust view” of the other before they meet in person. This bot-to-bot interaction aims to save users time and potentially highlight early deal-breakers, Grindr CEO George Arison told WSJ.

A significant challenge in developing this AI has been maintaining user privacy, a particularly sensitive issue for Grindr’s community. Many users are not open about their sexuality or live in places where being gay is illegal or stigmatized, notes WSJ.

Privacy concerns are heightened by past issues, including the collection and sale of precise location data from millions of Grindr users since 2017. Grindr stopped sharing location data with ad networks in 2020, reported WSJ.

The company addressed these challenges by partnering with Ex-human, an AI firm specializing in “empathetic AI technology” trained on romantic conversations. Last year, Grindr integrated a clone of Ex-human’s AI model into its system, according to WSJ.

This model is being customized to better reflect queer culture by incorporating Grindr’s own data and slang. Arison explained that Ex-human’s original model was primarily trained on straight romantic conversations, and Grindr is working to “make it more gay,” reported WSJ.

To protect user data, Grindr will ask for permission before using chat histories for AI training, noted WSJ.

The company has also implemented safeguards to prevent misuse, such as blocking the wingman from engaging in conversations involving commercial activity or solicitation, an issue identified during testing, reported WSJ.

As Grindr continues to expand this feature, it raises questions about the role of AI in human relationships.

While it may streamline dating by cutting through early-stage hurdles, there’s a risk of over-reliance on technology, potentially diminishing the organic, human elements that make relationships meaningful. The challenge will be for Grindr to ensure its AI enhances, rather than replaces, the genuine connection people seek.

Image by EFF-Graphics, from Wikimedia Commons

AI-Powered Security Cameras In Colorado Schools

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Justyn Newman, Former Lead Cybersecurity Editor

In a Rush? Here are the Quick Facts!

- Nearly 400 AI cameras are deployed in Cheyenne Mountain School District, Colorado Springs.

- Cameras use AI facial recognition to track individuals based on photos or descriptions.

- Critics raise concerns about privacy and AI surveillance disproportionately impacting marginalized students.

Nearly 400 AI-powered cameras are now operational across the Cheyenne Mountain School District in Colorado Springs, according to The Denver Post (TDP). These cameras, equipped with facial-recognition technology, allow school officials to track and identify individuals, sparking debates around privacy and security in schools.

The AI-enabled cameras can detect and track people based on physical characteristics or a photo uploaded into the system, notes TDP. School administrators can, for instance, input a picture of a “person of interest,” and the system will notify them when that individual is captured on camera, providing relevant footage in real time.

The cameras can also locate individuals by description, notes TDP. For example, a principal could search for a student wearing a red shirt and yellow backpack, and the AI system would locate any matching individuals within seconds.

“We need to balance that for potential misuses and overly zealous surveillance. That’s what we’ve been grappling with,” he added, as reported by TDP.

A few Colorado school districts and higher education institutions have adopted AI surveillance technologies to improve student safety. A statewide moratorium has blocked most other schools from doing the same, though that could change when the ban ends next summer, according to TDP.

At the same time, state legislators and experts are debating how best to regulate AI usage in schools, balancing security against concerns about privacy and the ethics of using AI to monitor children.

TDP notes that last month, Verkada, a California-based security technologies company, held a conference in Denver to showcase its products, which include AI cameras, wireless lockdown systems, and panic buttons.

Representatives from Cheyenne Mountain School District and Aims Community College, both current users of Verkada’s technologies, attended the event to discuss how these tools have improved campus security, said TDP.

Greg Miller, executive director of technology for the Cheyenne Mountain district, highlighted the system’s efficiency:

“It’s been critical in multiple incidents where we can click on a face and know which door that child exited so they can find them and safely make sure they aren’t harming themselves. We can do that in under 30 seconds,” he said, as reported by TDP.

Despite the technology’s potential benefits, there are concerns about its accuracy and impact on marginalized students. The American Civil Liberties Union (ACLU) of Colorado, part of the legislative AI task force, remains skeptical, notes TDP.

“We don’t think that the potential benefits — and there’s not a whole lot of data to prove those exist — outweigh the harms,” said Anaya Robinson, senior policy strategist at the ACLU of Colorado, as reported by TDP.

As the 2025 legislative session approaches, Colorado lawmakers are expected to propose new safeguards to ensure AI technologies in schools are used responsibly.