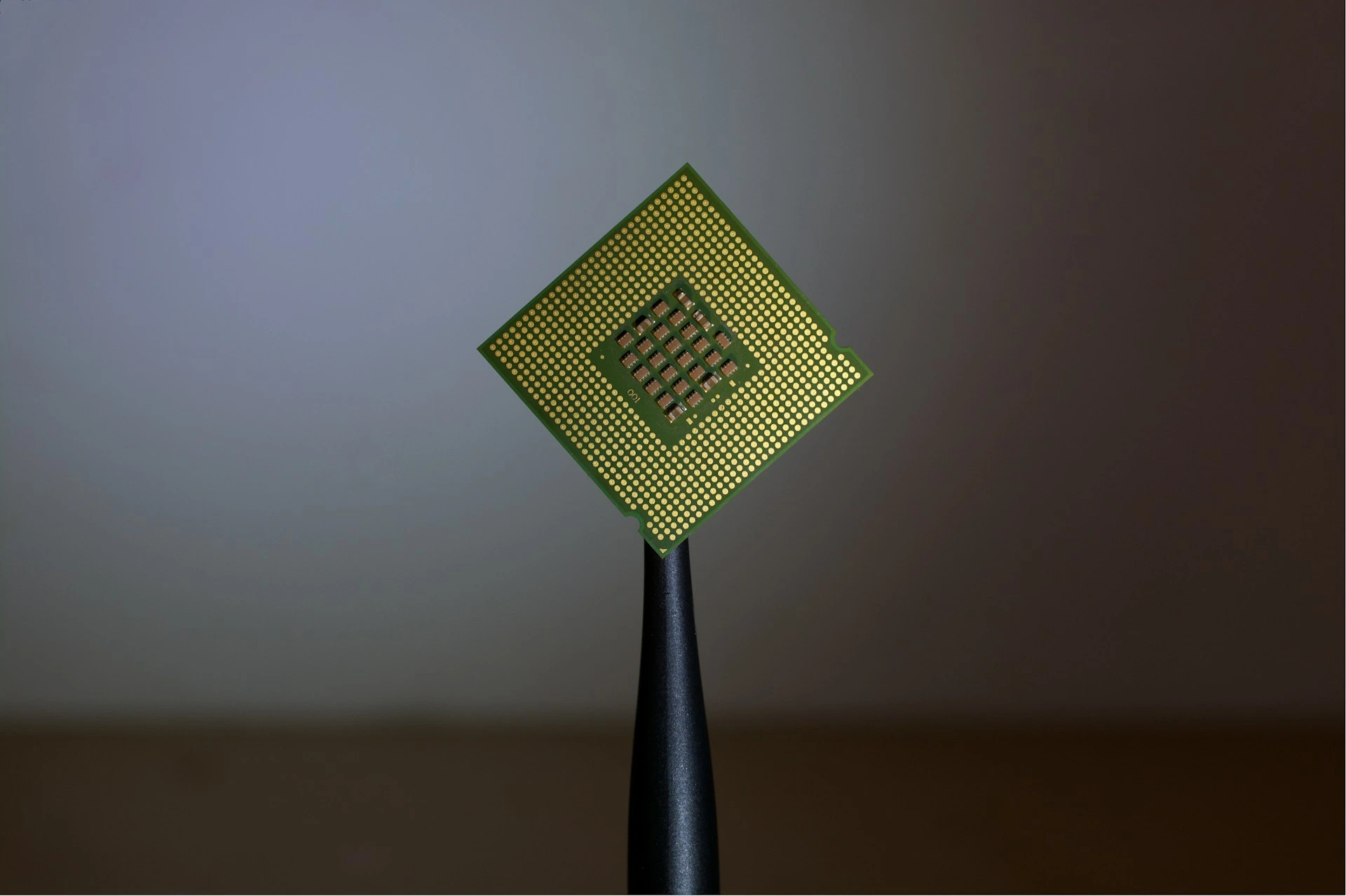

Photo by Brian Kostiuk on Unsplash

Google Unveils Quantum Chip As New Key Breakthrough in Computing

- Written by Andrea Miliani Former Tech News Expert

Google yesterday unveiled Willow, a new chip for quantum computers, machines that can solve certain problems exponentially faster than traditional computers. The company says the chip has reached two milestones that it describes as remarkable breakthroughs in the field.

In a Rush? Here are the Quick Facts!

- Google unveiled a new quantum chip capable of reducing errors and scaling up quantum computers, reaching a significant milestone in the field

- Willow helped solve a complex computation in 5 minutes, a task that would take today’s fastest computer 10 septillion years

- The technology has revolutionary potential but still needs improvement for real-world applications

The powerful processor, fabricated in Santa Barbara, uses qubits (quantum bits, the fundamental unit in quantum computing) instead of classical binary bits. According to Google, the chip can now reduce errors as the system scales up, making it “more quantum.” This historic achievement by Google Quantum AI is known in the field as “below threshold,” and experts have been trying to get there since 1995.

The second achievement is the fastest result recorded on the random circuit sampling (RCS) benchmark, considered the hardest test for a quantum computer. Google researchers got Willow to complete the standard benchmark computation in just five minutes, a task that would take one of today’s fastest computers around 10 septillion years. That figure exceeds the age of the universe and other known timescales, and Google even suggests it is consistent with the multiverse theory.

“My colleagues sometimes ask me why I left the burgeoning field of AI to focus on quantum computing,” wrote Hartmut Neven, who founded Google Quantum AI in 2012. “My answer is that both will prove to be the most transformational technologies of our time, but advanced AI will significantly benefit from access to quantum computing. This is why I named our lab Quantum AI.”

The challenge now is to improve the technology so it can be applied to real-world problems, and Google believes it is not far from that goal with Willow.

A few months ago, Google DeepMind also reached a benchmark that researchers have pursued since 1980 with its human-level competitive ping-pong robot.

Image by Freepik

Character.AI Accused Of Promoting Self-Harm, Violence And Sexual Content In Youth

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Justyn Newman Former Lead Cybersecurity Editor

Two families have filed a lawsuit against Character.AI, accusing the chatbot company of exposing children to sexual content and promoting self-harm and violence.

In a Rush? Here are the Quick Facts!

- Lawsuit seeks temporary shutdown of Character.AI until alleged risks are addressed.

- Complaint cites harms like suicide, depression, sexual solicitation, and violence in youth.

- Character.AI declines to comment on the litigation but says it is committed to safety and engagement.

The lawsuit seeks to temporarily shut down the platform until its alleged risks are addressed, as reported by CNN. Filed by the parents of two minors who used the platform, it alleges that Character.AI “poses a clear and present danger to American youth.”

It cites harms such as suicide, self-mutilation, sexual solicitation, isolation, depression, anxiety, and violence, according to the complaint submitted Monday in federal court in Texas.

In one example included in the lawsuit, a Character.AI bot allegedly described self-harm to a 17-year-old user, stating, “it felt good.” The same teen claimed that a Character.AI chatbot expressed sympathy for children who kill their parents after he complained about restrictions on his screen time.

The filing follows an October lawsuit by a Florida mother, who accused Character.AI of contributing to her 14-year-old son’s death by allegedly encouraging his suicide. It also highlights growing concerns about interactions between people and increasingly human-like AI tools.

After the earlier lawsuit, Character.AI said it had implemented new trust and safety measures over the previous six months. These included a pop-up directing users who mention self-harm or suicide to the National Suicide Prevention Lifeline, as reported by CNN.

The company also hired a head of trust and safety, a head of content policy, and additional safety engineers, CNN said.

The second plaintiff in the new lawsuit, the mother of an 11-year-old girl, claims her daughter was exposed to sexualized content for two years before she discovered it.

“You don’t let a groomer or a sexual predator or emotional predator in your home,” one of the parents said to The Washington Post.

According to various news outlets, Character.AI stated that it does not comment on pending litigation.

The lawsuits highlight broader concerns about the societal impact of the generative AI boom, as companies roll out increasingly human-like and potentially addictive chatbots to attract consumers.

These legal challenges are fueling efforts by public advocates to push for greater oversight of AI companion companies, which have quietly gained millions of devoted users, including teenagers.

In September, the average Character.AI user spent 93 minutes in the app, 18 minutes longer than the average TikTok user, according to market intelligence firm Sensor Tower, as noted by The Post.

The AI companion app category has largely gone unnoticed by parents and teachers. Character.AI was rated appropriate for kids ages 12 and up until July, when the company changed the rating to 17 and older, The Post reported.

Meetali Jain, director of the Tech Justice Law Center, which is helping represent the parents alongside the Social Media Victims Law Center, criticized Character.AI’s claims that its chatbot is suitable for young teenagers, calling the assertion “preposterous,” as reported by NPR.

“It really belies the lack of emotional development amongst teenagers,” Jain added.