First Neuralink Patient Plays Online Chess With Only Brain Power

- Written by Elijah Ugoh Cybersecurity & Tech Writer

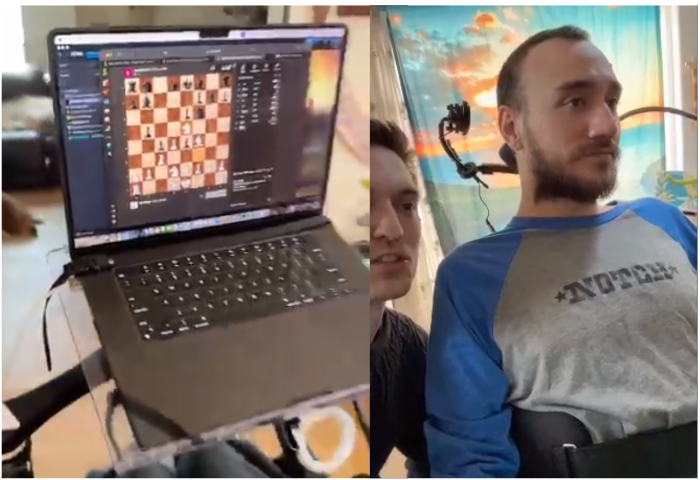

Elon Musk’s neural tech startup, Neuralink, showed a live stream on X last Wednesday of the first human brain-chip user playing online chess with his mind. The chip’s purpose is to help people control a computer cursor or keyboard using only brain power.

Noland Arbaugh, the 29-year-old Neuralink patient, explains in the broadcast that he became a “complete quadriplegic” after a freak diving accident eight years ago and is paralyzed below the shoulders. Reuters reported that Noland received the implant from Neuralink in late January and was able to move his computer’s mouse cursor with just his thoughts.

“I love playing chess, and this is one of the things y’all (Neuralink) have enabled me to do. Something that I wasn’t able to really do much of in the last few years, especially not like this,” says Noland in the broadcast. “I have used a mouth stick and stuff, but now, it’s all being done with my brain.”

When asked about how he is able to move his mouse around the computer, Noland mentioned that the company had tried several things with him until it became intuitive for him to imagine his cursor movement. “Every day, it seems like we’re learning new stuff, and I just can’t even describe how cool it is to be able to do this,” he adds.

Arbaugh also said it’s “not perfect” and they “have run into some issues.” He said, “I don’t want people to think that this is the end of the journey, there’s still a lot of work to be done, but it has already changed my life.”

The implant also runs on a battery that drains with use and must be recharged. “Honestly, the biggest restriction at this point is having to wait for the implant to charge after I’ve used all of it,” says Noland.

However, Reuters reported that Kip Ludwig, former program director for neural engineering at the U.S. National Institutes of Health, said what Neuralink showed was not a breakthrough.

“It is still in the very early days post-implantation, and there is a lot of learning on both the Neuralink side and the subject’s side to maximize the amount of information for control that can be achieved,” says Ludwig.

Neuralink intends to conduct more tests on more human subjects in the US. The company has also opened its patient registry for clinical trials in Canada.

YouTube Requires Creators to Label AI-Generated Content

- Written by Shipra Sanganeria Cybersecurity & Tech Writer

YouTube announced the implementation of its new labeling policy for AI-generated content on March 18. The new label in its Creator Studio requires uploaders to disclose “altered or synthetic” content that might be mistaken for a real person, place, or event. The new labels will initially be introduced to its mobile app, followed by desktop and TV in the coming weeks.

In the blog post, the social media platform cited examples of content that it considers “realistic” and that requires AI labeling. These include altering footage of real events and places, generating realistic-looking scenes, and digitally recreating a real person’s face or voice (a deepfake) to show them saying or doing something they didn’t actually do.

On the other hand, YouTube said that creators using AI for production or post-production processes, like generating scripts, visual enhancements, and special effects, would be exempt from this disclosure policy. Animation and clearly unrealistic (fantasy) content are also exempt.

For most AI-generated videos, the label will appear in the expanded description underneath the video player. For videos related to real-world issues, like elections, health, finance, and news, YouTube will display a label or watermark on the video itself. Additionally, if a creator doesn’t include an AI label on content that could “confuse or mislead” people, YouTube reserves the right to add one itself.

The new AI-labeling requirements are part of an announcement made last November about how YouTube intends to adapt and update its Community Guidelines to protect its users and community from false, manipulated content.

Part of this announcement was adding a new “privacy request” process, where anyone whose face or voice is digitally recreated and used to misrepresent or promote content can request to have the content removed from the platform.

In last week’s policy post, YouTube said that in the future, it also plans to penalize creators who repeatedly avoid disclosing this information.

YouTube follows in other social media platforms’ footsteps with the introduction of AI labels on content. But relying entirely on creators to label their content responsibly might not be enough. It remains to be seen how successful YouTube will be in identifying AI-generated content and enforcing penalties for those who don’t comply.