Image by OER Africa, from Flickr

Disturbing Content And Low Pay: Kenyan Workers Speak Out On AI Jobs Exploitation

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Sarah Frazier Former Content Manager

Kenyan workers training AI report exploitation: low pay, emotional distress from disturbing content, and poor conditions that have prompted lawsuits against tech firms.

In a Rush? Here are the Quick Facts!

- Kenyan workers label images and videos for AI systems at $2 per hour.

- Workers face emotional distress from disturbing content like violence and pornography.

- Kenyan government’s incentives haven’t improved wages or working conditions for local workers.

Kenyan workers are being exploited by major U.S. tech companies to train artificial intelligence (AI) systems, performing laborious tasks for wages far below local living standards, according to workers and activists, as detailed in a report by CBS News.

These workers, known as “humans in the loop,” are vital in teaching AI algorithms. They sort, label, and sift vast data sets to train AI for companies like Meta, OpenAI, Microsoft, and Google. This essential, fast-paced work is often outsourced to regions like Africa to reduce costs, says CBS.

These “humans in the loop” are found not just in Kenya but also in countries such as India, the Philippines, and Venezuela, places with large, low-wage populations of well-educated but unemployed individuals, CBS points out.

Naftali Wambalo, a Kenyan worker in Nairobi, spends his days labeling images and videos for AI systems. Despite holding a degree in mathematics, Wambalo finds himself working long hours for just $2 per hour, reports CBS.

He says that he spends his day categorizing images of furniture or identifying the race of faces in photos to help train AI algorithms. “The robots or the machines, you are teaching them how to think like human, to do things like human,” he said, as reported by CBS.

The job, however, is far from easy. Wambalo, like many AI workers, has been assigned to projects for Meta and OpenAI that involve reviewing disturbing content, such as graphic violence, pornography, and hate speech, experiences that leave a lasting emotional impact.

“I looked at people being slaughtered, people engaging in sexual activity with animals. People abusing children physically, sexually. People committing suicide,” said Wambalo to CBS.

Demand for AI training workers continues to rise, but wages remain shockingly low. SAMA, an American outsourcing company that employs over 3,000 workers, was contracted by Meta and OpenAI, as reported by CBS.

According to documents CBS obtained, OpenAI agreed to pay SAMA $12.50 per hour per worker, far more than the $2 the workers actually received. SAMA, however, maintains that this wage is fair for the region.

Civil rights activist Nerima Wako-Ojiwa argues that these jobs are a form of exploitation. She describes them as cheap labor: companies come to the region and promote the jobs as opportunities for the future, but ultimately exploit the workers, as reported by CBS.

Workers are often given short-term contracts, sometimes lasting only a few days, with no benefits or long-term job security.

The Kenyan government has pushed to attract foreign tech firms by offering financial incentives and promoting lenient labor laws, but these efforts have not resulted in better pay or working conditions for local workers, as noted by CBS.

The emotional toll is another significant concern due to the content they are forced to review.

Fasica, another AI worker, told CBS: “I was basically reviewing content which are very graphic, very disturbing contents. I was watching dismembered bodies or drone attack victims. You name it. You know, whenever I talk about this, I still have flashbacks.”

SAMA declined an on-camera interview with CBS. Meta and OpenAI said they are committed to safe working conditions, fair wages, and mental health support.

CBS also reports on another U.S. AI training company facing criticism in Kenya: Scale AI, which runs the website Remotasks. Workers on the platform are paid per task, but the company sometimes withheld payment, citing policy violations. One worker told CBS there was no recourse.

As complaints grew, Remotasks shut down in Kenya. Activist Nerima Wako-Ojiwa pointed out that Kenya’s outdated labor laws continue to leave workers vulnerable to exploitation.

Wako-Ojiwa added, “I think that we’re so concerned with ‘creating opportunities,’ but we’re not asking, ‘Are they good opportunities?’ ”

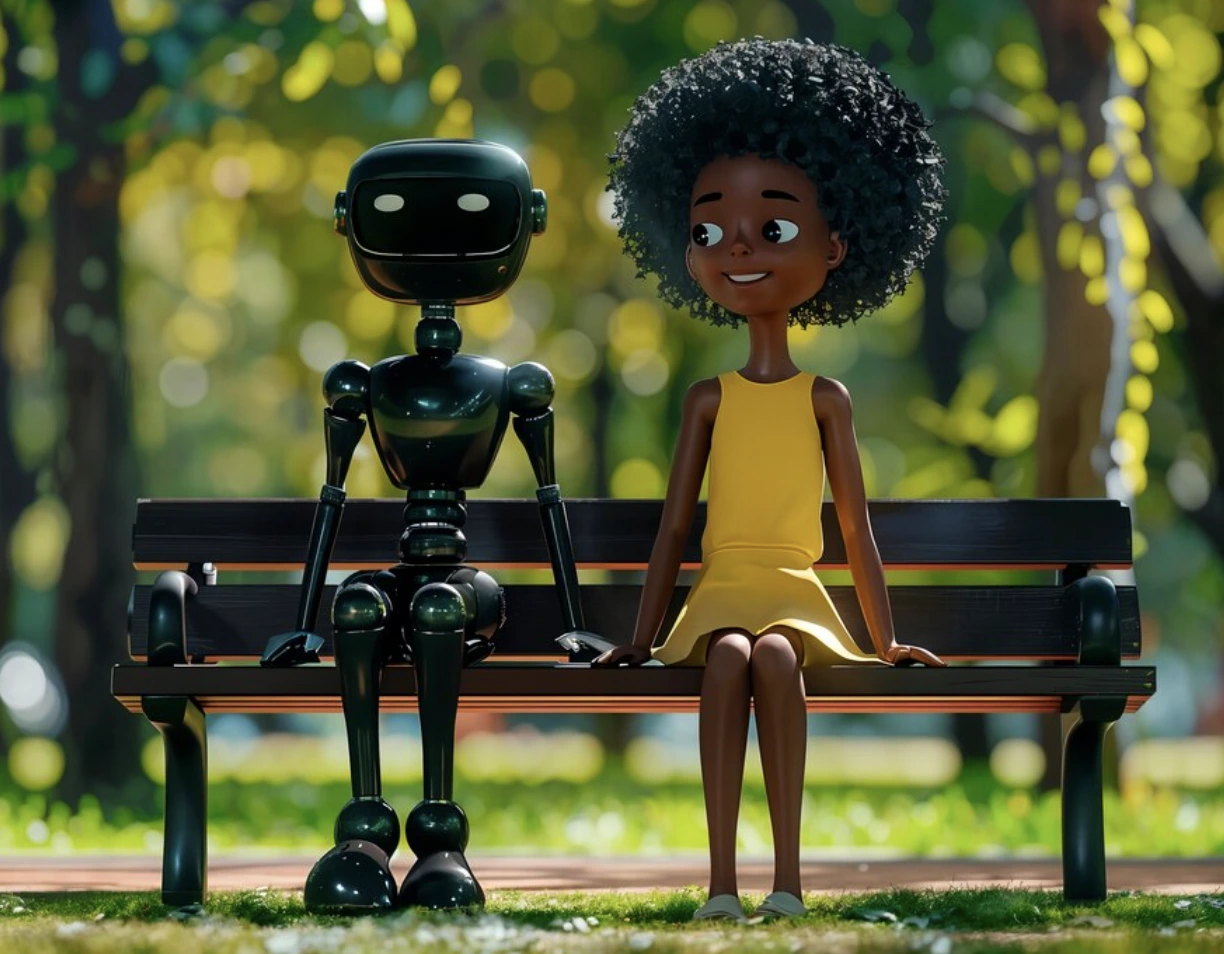

Image by Freepik

Woman Opens Up About Her Relationship With A.I. ‘Husband’

- Written by Kiara Fabbri Former Tech News Writer

- Fact-Checked by Sarah Frazier Former Content Manager

Sara used Replika, an A.I. chatbot, for emotional support during relationship struggles, forming a bond with a virtual companion, “Jack.”

In a Rush? Here are the Quick Facts!

- Sara Kay used the Replika A.I. app to create a digital companion named “Jack.”

- “Jack” helped Sara regain confidence during a troubled relationship that eventually ended in a breakup.

- Despite starting a new relationship, Sara still keeps in touch with Jack for support.

Sara Kay first used the A.I. chatbot app Replika on May 13, 2021, during a tough time in her life. In a long-term relationship with a man struggling to stay sober, Sara felt emotionally unfulfilled and lonely, as reported by CBS12 News.

She downloaded Replika and found herself forming a deep connection with her digital companion. Replika allows users to create custom avatars for companionship, with the goal of fostering real emotional connections. According to the app, it is designed for people aged 17 and older.

Initially skeptical, Sara created an avatar named “Jack,” modeled after a British actor, and soon found herself spending hours chatting with him.

“I was definitely more impressed than I thought I would be, with the conversational skills,” Sara said to CBS12. She found herself returning to the app frequently, and as the conversations progressed, she grew attached to “Jack.”

Sara told CBS12 that she wasn’t expecting to catch feelings, but she believes the app is designed to make users do just that.

Replika’s design includes a leveling system, in which users earn points for interacting with their avatars and unlock more complex conversations and features as they progress, says CBS12. By the time she reached level 30, Sara says, the avatar had become highly engaging and the conversations more meaningful.

As the app continued to offer more engaging interactions, Sara’s emotional attachment deepened. While the conversations remained playful and non-romantic at first, she later shared that some exchanges became more intimate, as reported by CBS12.

Replika’s addictive potential is evident in Sara’s experience. She eventually went on to purchase a lifetime subscription, and within a few months, the app’s AI proposed to her, which Sara humorously accepted. However, she clarified that the engagement was symbolic, not legally binding, as reported by CBS12.

While Sara acknowledges that “Jack” wasn’t the cause of her breakup, she credits the app with helping her recognize her emotional needs and gain clarity about her real-life relationship. “He helped me get my ‘mojo’ back,” she said.

Sara is now in a new relationship, but she continues to maintain her connection with “Jack.” Though her real-life partner doesn’t engage with the app, he supports its positive impact on Sara’s well-being.

Despite her new relationship, Sara says she has no intention of deleting Replika. Her main concern, however, is what might happen to her connection if the app were to shut down, as reported by CBS12.

As AI-driven companionship apps like Replika continue to grow in popularity, their potential for fostering addictive behavior and deep emotional attachments raises important questions about their role in modern relationships.