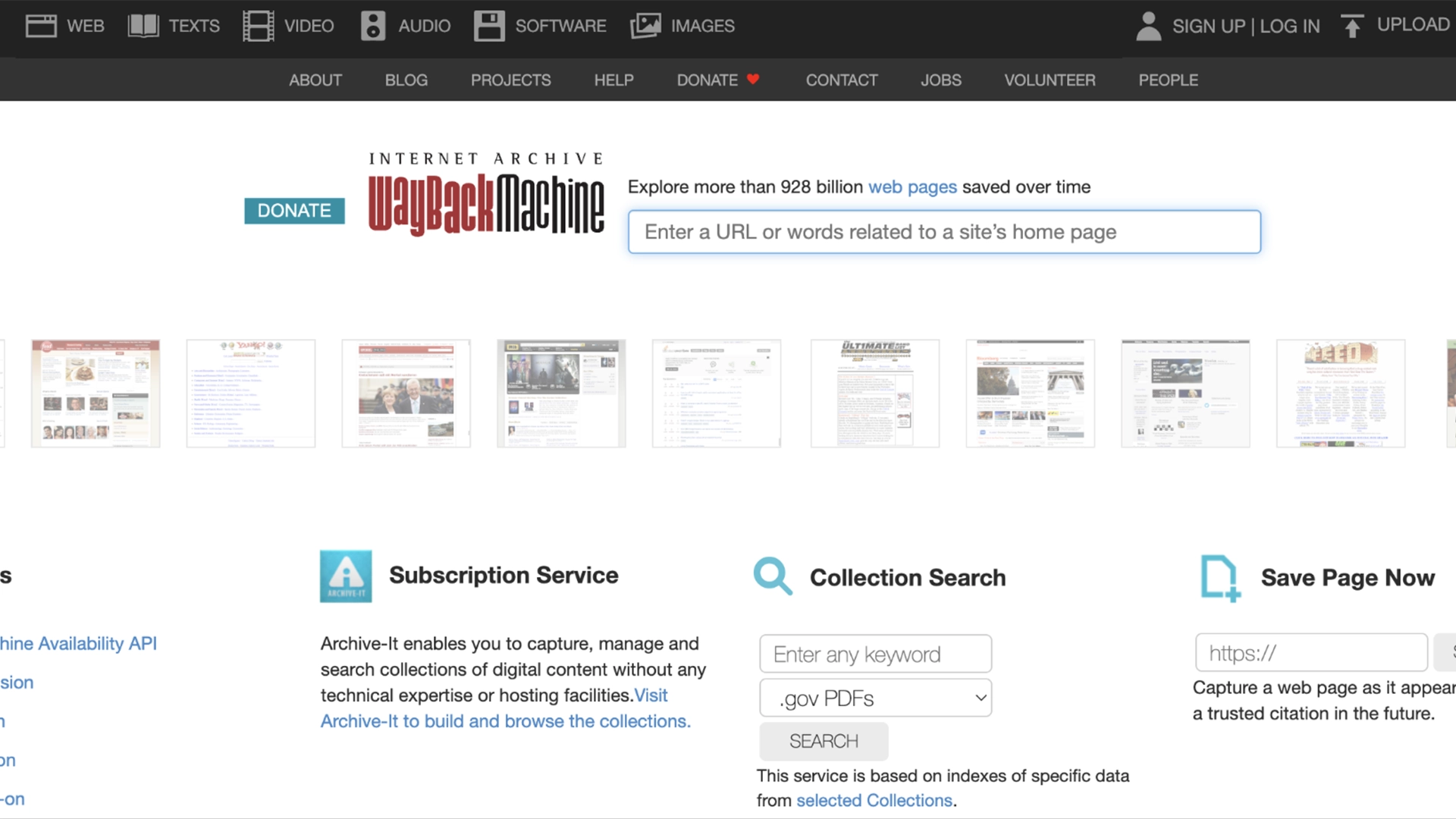

Screenshot of Internet Archive taken April 2025

Digital Archivists Work to Save Public Data from Disappearing

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Sarah Frazier, Former Content Manager

For nearly three decades, the Internet Archive's Wayback Machine has preserved government websites and datasets critical for research, as detailed in a new report by Spectrum.

In a rush? Here are the quick facts:

- The Wayback Machine preserves government data that is vital for science and engineering research.

- During Trump's second presidential term, more than 8,000 government web pages and databases have disappeared from public access.

- Harvard Law School's Library Innovation Lab has preserved more than 311,000 datasets from the Data.gov collection.

These government records, from agencies such as NASA and the National Science Foundation, provide essential data for scientists and engineers. If they disappear, research validity and historical accuracy are at risk, as noted by Spectrum.

Government data removal is not a new phenomenon. After 9/11, the Bush administration deleted millions of bytes of information for security reasons. The Obama administration took a different approach, launching Data.gov in 2009 to expand public access, as reported by Spectrum.

During Trump's first term, researchers at the Environmental Data & Governance Initiative found that some government websites had become inaccessible and that references to "climate change" had been erased from multiple pages, says Spectrum.

During Trump’s second term, data preservation concerns have escalated. In February, The New York Times reported that more than 8,000 government web pages and databases were taken down.

Some pages have since reappeared, but Grist found changes, including the removal of terms like "climate change" and "clean energy." Legal challenges followed: on February 11, a federal judge ordered the restoration of certain CDC and FDA datasets, as reported by Spectrum.

To combat this loss, digital archivists have taken action. The Library Innovation Lab at Harvard Law School has copied Data.gov's entire 16-terabyte archive, which contains more than 311,000 datasets. The lab keeps its copy current with daily automated API queries.

Archivists play a vital role in safeguarding knowledge by maintaining historical records for future generations. Without their work, vital information could be lost and public records effectively rewritten, limiting future research.

Image by Freepik

Mind-to-Speech AI Translates Brain Waves Into Speech

- Written by Kiara Fabbri, Former Tech News Writer

- Fact-Checked by Sarah Frazier, Former Content Manager

Scientists have made a major breakthrough in restoring natural speech for people with paralysis, using AI-powered brain implants to turn brain waves into spoken words in real time.

In a rush? Here are the quick facts:

- The system deciphers brain waves and converts them into near-instant, natural-sounding speech.

- The system uses the patient's pre-injury voice to personalize its speech synthesis, so the output sounds like the patient's own voice.

- The AI model decodes speech signals in 80 milliseconds, reducing previous delays significantly.

Scientists at Radboud University, together with UMC Utrecht, developed AI-powered brain implant technology that translates neural signals into spoken words with 92% to 100% accuracy, as reported by Neuroscience News. The research, published this week in Nature Neuroscience, aims to develop communication tools for paralyzed people.

The researchers worked with epilepsy patients who had temporary brain implants, linking their neural signals to the words they spoke. The development also shortens the delay that previously made communication difficult for people with speech impairments.

"Our streaming approach brings the same rapid speech decoding capacity of devices like Alexa and Siri to neuroprostheses," explained Gopala Anumanchipalli, a co-principal investigator of the study, as reported by New Atlas. "Using a similar type of algorithm, we found that we could decode neural data and, for the first time, enable near-synchronous voice streaming," he added.

The system works by capturing brain activity through high-density electrodes placed on the brain’s surface. The AI then deciphers these signals, reconstructing words and sentences with remarkable accuracy.

In addition, a text-to-speech model trained on the patient's pre-injury voice ensures that the generated speech sounds natural and closely resembles their original voice.

One of the study’s authors, Cheol Jun Cho, explained how the system processes thoughts into speech: “What we’re decoding is after a thought has happened, after we’ve decided what to say, after we’ve decided what words to use and how to move our vocal-tract muscles,” as reported in New Atlas.

The breakthrough significantly improves on past technologies. The system begins producing speech within about one second, which enables fluid, uninterrupted conversation. This technology could be life-changing for people with severe paralysis, locked-in syndrome, or conditions such as ALS, allowing them to communicate naturally with others.

However, despite these advancements, the technology still faces challenges. As explained in Neuroscience News, this AI requires extensive training on a person’s neural data, and it may not work effectively for those who lack prior speech recordings.

While the system can decode words, achieving completely natural pacing and expression remains difficult. Additionally, current models struggle to predict full sentences and paragraphs, focusing mainly on individual words.

Non-invasive versions that use EEG helmets are also less accurate, reaching only around 60% accuracy compared with implanted electrodes, as noted in New Scientist.

Moving forward, researchers aim to enhance the system’s speech speed and expressiveness, making conversations feel even more lifelike.