Canva Challenges Adobe’s Digital Design Dominance with Affinity Acquisition

- Written by Shipra Sanganeria Cybersecurity & Tech Writer

Canva, the web-based design platform, announced on Tuesday its acquisition of the creative software company Affinity. Many outlets have already described the move as Canva’s push to challenge Adobe’s dominance in digital design software.

With this acquisition, Canva gains ownership of UK-based Affinity’s Designer, Photo, and Publisher applications.

While the financial figures for the acquisition were not disclosed by the companies, Bloomberg estimates the deal to be worth “several hundred million pounds.”

Since its launch in 2013, Canva has made numerous acquisitions to expand its foothold in the digital design industry. Although it claims more than 170 million users worldwide, the platform has been unable to attract creative professionals like photographers, illustrators, and video editors due to the absence of dedicated design applications.

By acquiring Affinity’s creative suite, a direct competitor to Adobe’s Illustrator, Photoshop, and InDesign software, Canva hopes to fill this gap and tap the global business-to-business digital design market.

“While our last decade at Canva has focused heavily on the 99% of knowledge workers without design training, truly empowering the world to design includes empowering professional designers too,” Cliff Obrecht, Co-Founder and COO at Canva, said in the acquisition press release. “By joining forces with Affinity, we’re excited to unlock the full spectrum of designers at every level and stage of the design journey.”

Canva co-founder Cameron Adams said in an interview with the Sydney Morning Herald that although future integration of the two platforms’ offerings cannot be ruled out, Affinity will continue to maintain its distinct identity.

With Affinity’s UK-based team of 90 professionals now part of Canva, the company hopes to reach a wider spectrum of the design community, particularly users disillusioned with the subscription-based model.

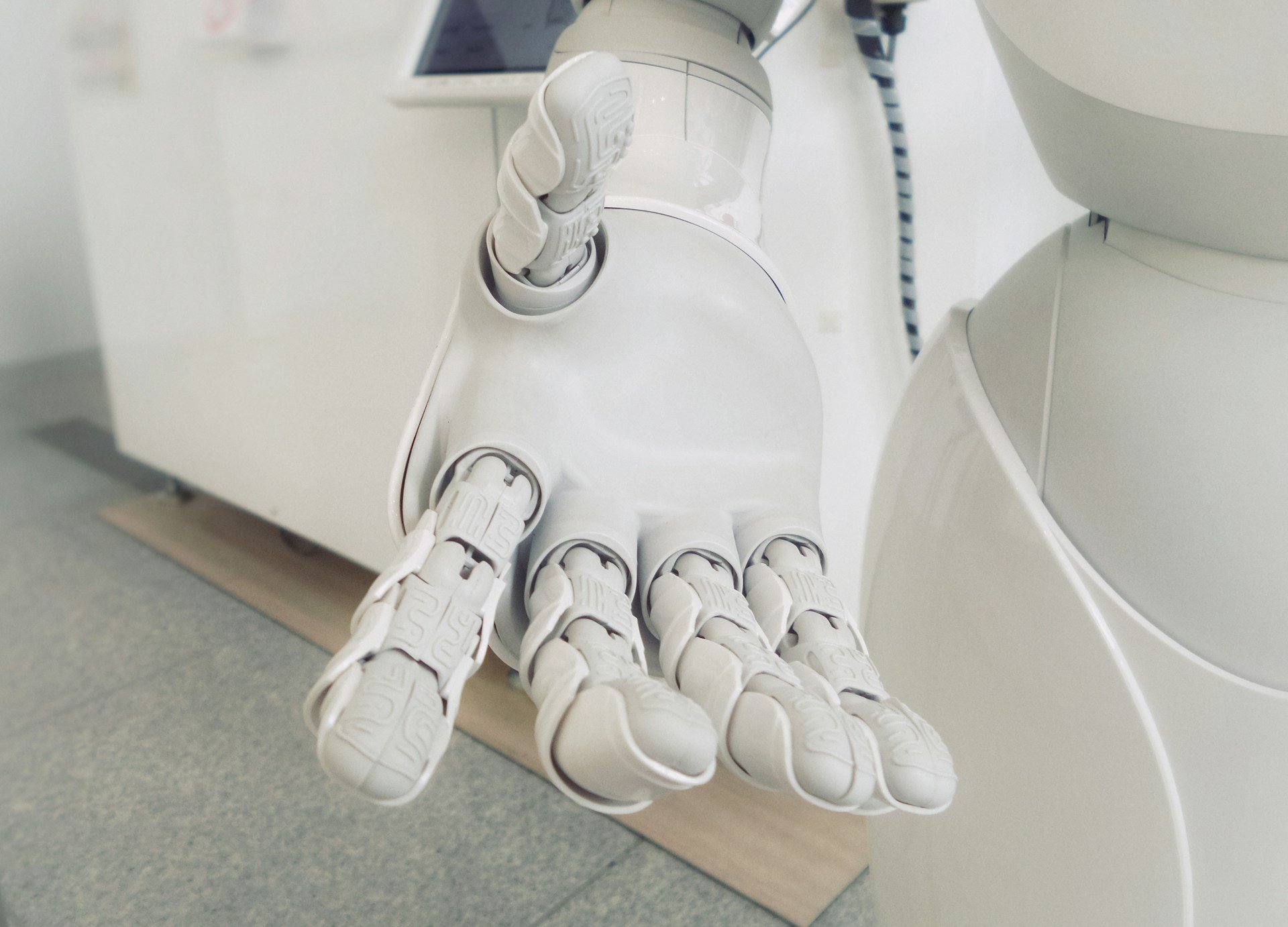

From Speech to Sense: Figure 01’s Breakthrough in Human-Like Conversations

- Written by Deep Shikha Content Writer

On March 13, the robotics company Figure released a video demonstration of its humanoid robot, Figure 01, engaging in speech-to-speech reasoning with a human, showcasing the robot’s conversational intelligence using visual inputs. Last month, OpenAI partnered with Figure to power Figure 01 with its advanced GPT language models.

The video shows that Figure 01 can see and react to what’s happening around it, as well as talk in an eerily realistic human way. When asked what it could see, Figure 01 described everything in front of it: a red apple, a dish rack, and the person asking the question. Beyond that, the humanoid appeared to comprehend the information much as a human would.

Figure founder and CEO Brett Adcock shared on X that Figure 01’s cameras feed data to a special OpenAI system, a Visual Language Model (VLM), which helps the robot see and understand its surroundings. OpenAI’s models also make it possible for the robot to understand spoken language, while Figure’s own technology helps it process images quickly so it can react to what it sees.

Adcock also confirmed that Figure 01 operated independently during the demo and that the video was filmed at actual speed.

When asked about its performance, Figure 01 responded with a hint of personality, stuttering before saying, “I-I think I did pretty well. The apple found its new owner, the trash is gone, and the tableware is right where it belongs.” It’s almost as if the robot was taking a moment of pride in its work, showcasing not just technical skill but a glimmer of character in its reply.

Google’s Gemini AI has shown similar skills by recognizing objects like rubber ducks and drawings in front of it, but Figure 01 can also grasp the context of a visual situation and respond appropriately. For example, it could figure out that an apple was edible and hand it to someone who asked for food, showcasing a significant leap in the advancement of these new VLMs.

Along with Adcock, many experts from Boston Dynamics, Tesla, Google DeepMind, and Archer Aviation have contributed to Figure 01. Adcock aims to create an AI system that could control billions of humanoid robots, dramatically changing many industries. This idea suggests a future where robots play a central role in our lives, similar to stories from science fiction.